Blog: June 3, 2025, Eleventh Week Honors Seminar by Prof. Dr. Alessandra Zarcone

Authors: Malte Clas, Harshavardhan Patil

“It is necessary to create sober, patient people who do not despair in the face of the worst horrors and who do not become exuberant with every silliness.”

– Antonio Gramsci (1891-1937)

In a world buzzing with AI hype and unsettling horror stories, this quote from Antonio Gramsci points to a surprisingly relevant mindset.

Public discussions about artificial intelligence often swing wildly between outright panic and unchecked optimism, leaving little room for balanced perspectives. But what if true optimism isn’t about ignoring the risks, but about critically engaging with them while still believing in our power to shape better outcomes? This is the essence captured in the final line of Gramsci’s quote:

“Pessimism of the intelligence, optimism of the will”

In a Nutshell

This week’s session explored how we can approach artificial intelligence with both critical awareness and constructive optimism. Starting with a basic overview of AI’s main capabilities, including classification, generation, and interaction, we looked at what AI actually does and how it learns. A key focus was the tension between technological progress and ethical responsibility. From there, the discussion shifted to the societal implications of these technologies, especially when they are used in sensitive areas like hiring or education.

One particularly engaging part of the session involved two use cases: an AI-based CV screening tool and an online tutoring assistant. These examples sparked a deeper conversation about what could go wrong, whether through biased data, lack of transparency, or overreliance on automated decisions. It became clear that genuine optimism about AI doesn’t mean turning a blind eye to these risks; rather, it involves actively questioning how we can design and use technology in ways that reflect human values and safeguard fundamental rights.

This led to questions about whose values AI systems are aligned with, and what “safe” AI really means in practice. The European AI Act served as a concrete example of how governments are beginning to regulate AI systems based on their potential risks.

The Deep Dive

One important realization came when discussing the CV classifier and the AI tutor. While these tools seem helpful on the surface, they can easily perpetuate unfairness if their training data contains historical biases or if users put too much faith in the AI’s automated judgments. This sparked reflection on how optimism in the context of AI is not about assuming things will go well, but about staying engaged and making sure these systems are used responsibly.

To explore the point further: an AI system’s outputs are deeply tied to its training data and the values embedded within that data. The AI doesn’t operate in a void per se; it mirrors the patterns and assumptions of its inputs, which can, unfortunately, lead to biased or even discriminatory outcomes. One such example came up when looking at how an AI might associate doctors with “he” and nurses with “she” based on biased training data, or how AI text generation could produce or perpetuate harmful stereotypes.
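To make the doctor/nurse example a bit more concrete, here is a minimal Python sketch of how a purely frequency-based "model" ends up mirroring its training data. The tiny corpus, the deliberate skew in it, and the function names `pronoun_counts` and `most_likely_pronoun` are our own illustrative assumptions; nothing like this was shown in the seminar, and real language models are far more complex.

```python
from collections import Counter

# Hypothetical toy corpus. The skew is deliberate and stands in for
# historical bias in real training data.
corpus = [
    "the doctor said he would call",
    "the doctor said he was late",
    "the doctor said she would call",
    "the nurse said she would help",
    "the nurse said she was busy",
    "the nurse said he would help",
]


def pronoun_counts(sentences, occupation):
    """Count 'he' and 'she' in sentences that mention the occupation."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        if occupation in words:
            counts.update(w for w in words if w in ("he", "she"))
    return counts


def most_likely_pronoun(sentences, occupation):
    """A naive 'model': predict the pronoun seen most often in training."""
    return pronoun_counts(sentences, occupation).most_common(1)[0][0]


print(pronoun_counts(corpus, "doctor"))
print(most_likely_pronoun(corpus, "doctor"))
print(most_likely_pronoun(corpus, "nurse"))
```

The "model" never decides that doctors are men; it simply repeats the majority pattern in the sentences it was given. Changing the balance of the corpus changes the prediction, which is the sense in which the data, not the algorithm alone, carries the bias.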

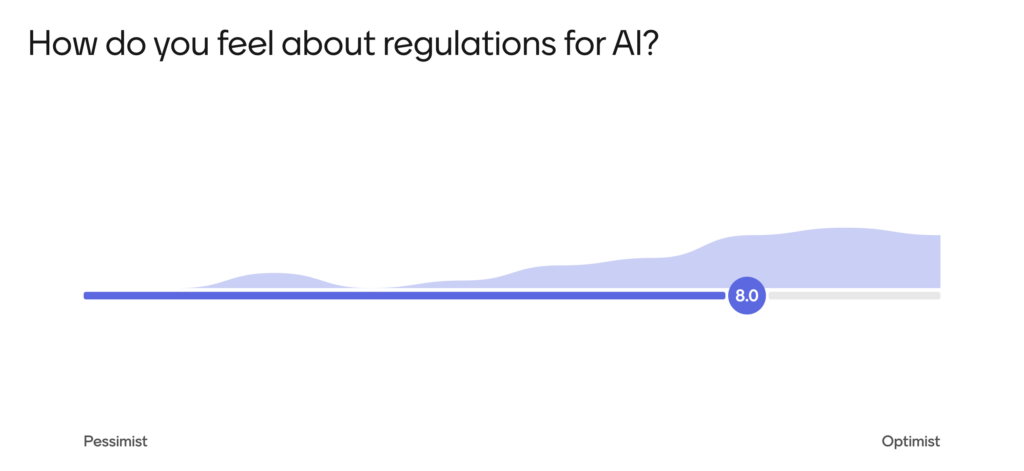

The need for regulation to ensure the fair use of AI was clearly reflected in our Mentimeter poll.

When asked whether we favored AI regulation, such as the European AI Act, or the complete deregulation of AI development, our group’s average leaned strongly towards regulation. The result showed a shared belief that thoughtful regulation can steer AI in a positive direction.

Outside The Seminar Room

The ideas discussed are already part of daily life. Automated systems are used to answer customer questions or support students in writing their blog entries. These tools often appear neutral, but their actual impact is shaped by the data they’re fed and the values guiding their design. And if we are not careful, they can reinforce existing inequalities.

The European AI Act is one real-world example of how optimism can take shape through policy. It shows that regulation is not about stopping progress but about guiding it in a responsible way. Optimism here means believing that better outcomes are possible if we stay informed and involved.

Optimism Much?

Optimism is often seen as a personal mindset, something about staying positive even when things go wrong. But in the context of AI, optimism becomes something more active. It means staying informed, asking questions, and not giving up responsibility just because a system appears to be too complex or out of control.

Optimism and critical thinking are not in conflict when it comes to AI. They rely on and support each other. This perspective can shape how we approach this technology in everyday life. Instead of blindly trusting digital tools or rejecting them out of fear, we can stay engaged and aware of how these systems affect, and are affected by, not only ourselves but also others. Optimism, in this sense, is about believing that better outcomes are possible when we take part in shaping them. Or, to put it more abstractly, in the words of Antonio Gramsci: “Pessimism of the intelligence, optimism of the will”.

The Road Ahead

Although many questions were discussed in our session, some remain unresolved and compel us to either deepen our engagement or maintain vigilant oversight in the future.

- 🧭 The presentation asked, “Whose values are we aligning our AIs to?” How can we ensure that AI is as neutral as possible in its development and deployment?

- ⚖️ We touched upon “doom-mongering” versus “realism” in AI discourse. What are effective strategies for fostering more nuanced public conversations about AI’s risks and benefits?

- 🧑‍🤝‍🧑 Given the concerns about bias in datasets, what innovative approaches can ensure AI systems are trained on more equitable and representative data from the outset?

- 🏛️ How can regulation like the EU AI Act keep pace with the rapid evolution of AI technology without stifling innovation?

- 📢 In an age of easily spread misinformation, how can awareness of potential bias in AI be raised?

Helpful Resources

Here are a few resources we highly recommend if you want to dive deeper:

- 📖 Book/Work:

- Explore the work of Dr. Joy Buolamwini on algorithmic bias, such as projects by the Algorithmic Justice League or the documentary “Coded Bias.”

- The book “Atlas of AI” by Kate Crawford

- 📃 Research Paper:

- Abid, A., Farooqi, M., & Zou, J. (2021). Persistent Anti-Muslim Bias in Large Language Models. Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society.

- 💻 Website:

- The official EU AI Act portal for up-to-date information: https://artificialintelligenceact.eu/

- DAIR statement on the “AI pause” letter: https://www.dair-institute.org/blog/letter-statement-March2023/

- 📑 Article/Statement:

- “Statement from the listed authors of Stochastic Parrots on the ‘AI pause’ letter” by Timnit Gebru, Emily M. Bender, et al. for a critical perspective on AI development.

- 💭 Quote Source: Gramsci, A. (1929-1930). Letters from Prison. (Source of the central quote.)
