08.05.2025 Kubernetes

Docker installation

For the Kubernetes part, Docker was first installed on the cluster. The following commands were run on the master and on each of the 4 clients:

sudo apt update

sudo apt install -y docker.io

sudo systemctl enable docker --now

The Python code was then copied over to the cluster and started in Docker:

scp -r .\python\master rtlabor@cluster4x4.informatik.tha.de:/home/rtlabor/python

docker build -t morse-api .

docker run --rm -p 8080:8080 morse-api

In the end, the Docker part worked.

Kubernetes

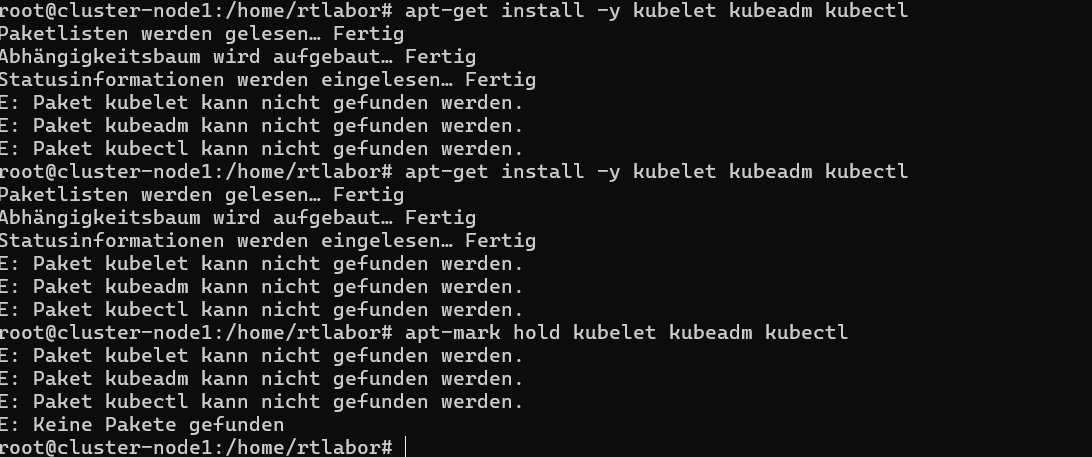

With Kubernetes, some errors remained unresolved at the end. The packages were installed as follows:

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

apt-get install -y kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl

This already led to errors, because some of the tools were not found even though the packages had been installed. The following commands were used to investigate:

dpkg -S swapoff

sudo apt update

sudo apt install --reinstall -y util-linux

echo $PATH
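The check behind dpkg -S and echo $PATH can be sketched as a small helper that reports which tools are not reachable through PATH. The function name missing_tools and the tool list in the example call are assumptions for illustration. On Debian and Ubuntu, swapoff and losetup are normally installed in an sbin directory, so a PATH without the sbin directories is one possible reason for "not found" even when the package is installed.

```shell
# Print every tool from the argument list that is not found on PATH.
# Returns 0 if all tools are present, 1 otherwise.
missing_tools() {
    status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
            status=1
        fi
    done
    return "$status"
}

# Example call (tool list assumed from the errors in this log):
# missing_tools swapoff losetup kubeadm kubelet kubectl
```

If a tool is listed as missing although dpkg -S finds its file, comparing the file's directory with the output of echo $PATH shows whether PATH is the problem.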

Kubeadm

systemctl enable --now kubelet

On the master:

sudo kubeadm init --pod-network-cidr=10.244.0.0/16

This command also failed on the master at first:

[init] Using Kubernetes version: v1.33.0

[preflight] Running pre-flight checks

W0504 18:43:04.944344 159203 checks.go:1065] [preflight] WARNING: Couldn't create the interface used for talking to the container runtime: failed to create new CRI runtime service: validate service connection: validate CRI v1 runtime API for endpoint "unix:///var/run/containerd/containerd.sock": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService

[WARNING Swap]: swap is supported for cgroup v2 only. The kubelet must be properly configured to use swap. Please refer to https://kubernetes.io/docs/concepts/architecture/nodes/#swap-memory, or disable swap on the node

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileExisting-losetup]: losetup not found in system path

[preflight] If you know what you are doing, you can make a check non-fatal with --ignore-preflight-errors=...

To see the stack trace of this error execute with --v=5 or higher

=> A container runtime problem, which we were able to resolve by changing /etc/containerd/config.toml:

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

Afterwards, containerd was restarted with systemctl restart containerd.
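The config change can also be scripted. The following is a minimal sketch, assuming a config.toml that already contains a SystemdCgroup line (for example one generated with containerd config default). The function names are hypothetical. The second helper checks for a disabled CRI plugin, which is a commonly reported cause of the "unknown service runtime.v1.RuntimeService" message. Whether that applied on this cluster is not confirmed by the log.

```shell
# Set SystemdCgroup = true in the containerd config file given as $1.
# Uses GNU sed in-place editing; indentation of the line is preserved.
enable_systemd_cgroup() {
    config_file=$1
    sed -i 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$config_file"
}

# Succeeds if the CRI plugin is listed under disabled_plugins in file $1.
cri_plugin_disabled() {
    grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$1"
}

# Example (run as root):
# cri_plugin_disabled /etc/containerd/config.toml && echo "CRI plugin is disabled"
# enable_systemd_cgroup /etc/containerd/config.toml
# systemctl restart containerd
```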

In addition, losetup was missing, which is part of util-linux:

sudo apt-get install -y util-linux

Kubernetes supports swap only under certain conditions. We therefore disabled it, as described in the warning:

sudo swapoff -a

sudo sed -i '/ swap / s/^/#/' /etc/fstab
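The sed expression above only matches swap entries separated by spaces and would add a second # to lines that are already commented out. A slightly more defensive variant, as a sketch: it also accepts tabs, skips commented lines, and only prints the result so it can be reviewed before /etc/fstab is touched. The function name comment_swap_entries is an assumption.

```shell
# Print the fstab-style file $1 with all active swap entries commented out.
# Lines that already start with '#' are left unchanged. Nothing is modified.
comment_swap_entries() {
    sed -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$1"
}

# Example: preview the change, then apply it manually if it looks right.
# comment_swap_entries /etc/fstab | diff /etc/fstab -
```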

After that, the command sudo kubeadm init --pod-network-cidr=10.244.0.0/16 succeeded and returned the following output:

kubeadm join 141.82.48.160:6443 --token qst86j.blwfc3cimvqk7h7j \
--discovery-token-ca-cert-hash sha256:059ac63b01a1fd33d56c477aa7440fe8ee8377b201c6f7ae7276b59c6f4f4fa4

This command adds Kubernetes nodes to the existing cluster: running the join command on a node connects it to the master. The token is used for authentication, and the hash of the cluster CA's public key lets the joining node verify that it is talking to the intended control plane.
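Before pasting a join command on every node, its two arguments can be sanity-checked. The sketch below validates only the format: a bootstrap token has the shape of 6 characters, a dot, and 16 characters, and the discovery hash is a SHA-256 digest in hex. It does not contact the cluster. The function name valid_join_args is hypothetical.

```shell
# Succeeds if $1 looks like a kubeadm bootstrap token and $2 like a
# discovery-token-ca-cert-hash value. Format check only, no cluster access.
valid_join_args() {
    token=$1
    ca_hash=$2
    printf '%s\n' "$token"   | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    printf '%s\n' "$ca_hash" | grep -Eq '^sha256:[0-9a-f]{64}$'        || return 1
}

# If the token has expired, a fresh join command can be printed on the master:
# kubeadm token create --print-join-command
```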

Kubectl

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f client-deployment.yaml

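This produced the following error:

error validating "deployment.yaml": error validating data: failed to download openapi: Get "http://localhost:8080/openapi/v2?timeout=32s": dial tcp [::1]:8080: connect: connection refused; if you choose to ignore these errors, turn validation off with --validate=false

The address localhost:8080 indicates that kubectl had no kubeconfig and fell back to its default server. The admin kubeconfig was therefore copied into the user's home directory: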

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes
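Which kubeconfig file kubectl will pick up can be checked before running any command. A minimal sketch, assuming only the two standard locations (the KUBECONFIG environment variable, otherwise $HOME/.kube/config). The function name kubeconfig_in_use is an assumption, and for a colon-separated KUBECONFIG it looks at the first entry only.

```shell
# Print the kubeconfig path kubectl would try first and succeed only if
# that file is readable.
kubeconfig_in_use() {
    if [ -n "${KUBECONFIG:-}" ]; then
        candidate=${KUBECONFIG%%:*}
    else
        candidate=$HOME/.kube/config
    fi
    printf '%s\n' "$candidate"
    [ -r "$candidate" ]
}

# Example:
# kubeconfig_in_use || echo "no readable kubeconfig, kubectl will try localhost:8080"
```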

kubelet

apt update

apt install -y apt-transport-https curl

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

apt-add-repository "deb https://apt.kubernetes.io/ kubernetes-xenial main"

apt update

apt install -y kubeadm kubelet kubectl

sudo swapoff -a

sudo systemctl enable kubelet

sudo systemctl start kubelet

sudo apt install -y software-properties-common

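This produced the following error:

Fehl:7 https://packages.cloud.google.com/apt kubernetes-xenial Release 404 Not Found [IP: 142.250.186.142 443]

Xenial is the codename of Ubuntu 16.04 and has long been superseded. The legacy Google-hosted Kubernetes apt repository has also been retired in favor of pkgs.k8s.io, which by itself would explain the 404.

sudo nano /etc/apt/sources.list.d/kubernetes.list

Here, kubernetes-xenial was replaced with kubernetes-jammy.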

sudo apt update
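Whether a sources file still points at the retired Google-hosted repository can be checked with a one-line grep. This is a sketch. The function name uses_legacy_k8s_repo is hypothetical, and the two host names are the ones that appear in the commands and the error message of this section.

```shell
# Succeeds if the apt sources file $1 contains an active entry for the
# legacy Kubernetes repository (apt.kubernetes.io / packages.cloud.google.com).
uses_legacy_k8s_repo() {
    grep -Eq '^[[:space:]]*deb[[:space:]].*(apt\.kubernetes\.io|packages\.cloud\.google\.com/apt)' "$1"
}

# Example:
# uses_legacy_k8s_repo /etc/apt/sources.list.d/kubernetes.list \
#     && echo "legacy repository entry found, switch to pkgs.k8s.io"
```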

Final state:

sudo ufw status

Status: active

To Action From

22/tcp ALLOW 141.82.0.0/16

sudo ufw allow 6443/tcp
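The firewall status above allows only SSH, and only the API server port 6443 was added. The Kubernetes "Ports and Protocols" documentation lists further TCP ports for control-plane and worker nodes. The sketch below only prints the matching ufw commands so they can be reviewed first. The function name k8s_ufw_rules is an assumption, and the network plugin needs additional ports that are not covered here. Whether the firewall contributed to the NotReady state on this cluster is not established.

```shell
# Print ufw commands for the TCP ports Kubernetes documents for a node role.
# $1 is either "control-plane" or "worker". Nothing is executed.
k8s_ufw_rules() {
    case $1 in
        control-plane) ports='6443 2379:2380 10250 10257 10259' ;;
        worker)        ports='10250 10256 30000:32767' ;;
        *) echo "usage: k8s_ufw_rules control-plane|worker" >&2; return 2 ;;
    esac
    for port in $ports; do
        printf 'ufw allow %s/tcp\n' "$port"
    done
}

# Example: review the list, then run the printed commands with sudo.
# k8s_ufw_rules control-plane
```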

kubectl get nodes

As text:

NAME STATUS ROLES AGE VERSION

cluster-node1 NotReady <none> 39s v1.33.0

cluster-node2 NotReady <none> 29s v1.33.0

cluster-node3 NotReady <none> 62s v1.33.0

cluster-node4 NotReady <none> 66s v1.33.0

cluster4x4 Ready control-plane 3h46m v1.33.0

The cluster nodes were present, but unfortunately they were in the NotReady state.

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-75cd4cc5b9-r8sjz 0/1 CrashLoopBackOff 38 (49s ago) 3h34m

calico-node-d84nr 0/1 Running 2 (19s ago) 7m23s

calico-node-m2m5t 0/1 Init:0/3 0 6m56s

calico-node-svbqd 1/1 Running 1 (95s ago) 6m46s

calico-node-vw5j8 0/1 Running 0 3h34m

calico-node-xs68k 0/1 Running 1 (15s ago) 7m19s

coredns-674b8bbfcf-22x5f 1/1 Running 0 3h53m

coredns-674b8bbfcf-2cjrk 1/1 Running 0 3h53m

etcd-cluster4x4 1/1 Running 30 (3h58m ago) 3h53m

kube-apiserver-cluster4x4 1/1 Running 30 (3h58m ago) 3h53m

kube-controller-manager-cluster4x4 1/1 Running 0 3h53m

kube-proxy-2jb2d 0/1 CrashLoopBackOff 4 (39s ago) 7m23s

kube-proxy-4j49v 0/1 CrashLoopBackOff 49 (4m53s ago) 3h53m

kube-proxy-cgxjg 1/1 Running 4 (115s ago) 6m46s

kube-proxy-r6vsc 1/1 Running 5 (90s ago) 7m19s

kube-proxy-r9z97 0/1 ContainerCreating 0 6m56s

kube-scheduler-cluster4x4 1/1 Running 30 (3h57m ago) 3h53m
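With this many pods, the ones that are not fully ready are easier to spot when filtered. A minimal sketch that reads the default kubectl get pods table from standard input and prints name and status of every pod whose READY column is not n/n. The function name unready_pods is an assumption, and the column positions are those of the default output format.

```shell
# Read "kubectl get pods" output on stdin and print NAME and STATUS of every
# pod where the number of ready containers differs from the total.
unready_pods() {
    awk 'NR > 1 {
        split($2, ready, "/")
        if (ready[1] != ready[2]) print $1, $3
    }'
}

# Example:
# kubectl get pods -n kube-system | unready_pods
```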

The Calico part (the network plugin) also had problems and kept restarting in a CrashLoopBackOff. The individual logs did not really help us here either:

Kube-System & Kube-Proxy:

I0506 19:32:24.367603 1 server_linux.go:63] "Using iptables proxy"

I0506 19:32:24.537817 1 server.go:715] "Successfully retrieved node IP(s)" IPs=["141.82.48.160"]

I0506 19:32:24.541595 1 conntrack.go:60] "Setting nf_conntrack_max" nfConntrackMax=262144

E0506 19:32:24.541701 1 server.go:245] "Kube-proxy configuration may be incomplete or incorrect" err="nodePortAddresses is unset; NodePort connections will be accepted on all local IPs. Consider using --nodeport-addresses primary"

I0506 19:32:24.587770 1 server.go:254] "kube-proxy running in dual-stack mode" primary ipFamily="IPv4"

I0506 19:32:24.587803 1 server_linux.go:449] "Detect-local-mode set to ClusterCIDR, but no cluster CIDR specified for primary IP family" ipFamily="IPv4" clusterCIDRs=null

I0506 19:32:24.587814 1 server_linux.go:145] "Using iptables Proxier"

I0506 19:32:24.600262 1 proxier.go:243] "Setting route_localnet=1 to allow node-ports on localhost; to change this either disable iptables.localhostNodePorts (--iptables-localhost-nodeports) or set nodePortAddresses (--nodeport-addresses) to filter loopback addresses" ipFamily="IPv4"

I0506 19:32:24.601135 1 server.go:516] "Version info" version="v1.33.0"

I0506 19:32:24.601376 1 server.go:518] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""

I0506 19:32:24.603917 1 config.go:440] "Starting serviceCIDR config controller"

I0506 19:32:24.603965 1 shared_informer.go:350] "Waiting for caches to sync" controller="serviceCIDR config"

I0506 19:32:24.604023 1 config.go:199] "Starting service config controller"

I0506 19:32:24.604113 1 shared_informer.go:350] "Waiting for caches to sync" controller="service config"

I0506 19:32:24.604056 1 config.go:105] "Starting endpoint slice config controller"

I0506 19:32:24.604181 1 shared_informer.go:350] "Waiting for caches to sync" controller="endpoint slice config"

I0506 19:32:24.604235 1 config.go:329] "Starting node config controller"

I0506 19:32:24.604301 1 shared_informer.go:350] "Waiting for caches to sync" controller="node config"

I0506 19:32:24.705016 1 shared_informer.go:357] "Caches are synced" controller="node config"

I0506 19:32:24.705028 1 shared_informer.go:357] "Caches are synced" controller="endpoint slice config"

I0506 19:32:24.705027 1 shared_informer.go:357] "Caches are synced" controller="service config"

I0506 19:32:24.705059 1 shared_informer.go:357] "Caches are synced" controller="serviceCIDR config"

kube-proxy apparently started normally. There is one warning about --nodeport-addresses: because nodePortAddresses is unset, NodePort connections are accepted on all local IPs.
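In logs of this format, the first character of each line is the severity (I for info, W for warning, E for error, F for fatal). A small filter that keeps only the non-info lines makes long logs quicker to scan. This is a sketch, and the function name klog_problems is hypothetical. For a pod in CrashLoopBackOff, kubectl logs --previous shows the output of the container that crashed, which is often more useful than the log of the freshly restarted one.

```shell
# Read klog-formatted log lines on stdin and print only warnings, errors
# and fatal messages. Exit status is 1 if no such line was found.
klog_problems() {
    grep -E '^[WEF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]+'
}

# Example (pod name taken from the pod list in this section):
# kubectl logs -n kube-system kube-proxy-2jb2d --previous | klog_problems
```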

Calico-node:

Name: calico-node-m2m5t
Namespace: kube-system
Priority: 2000001000
Priority Class Name: system-node-critical
Service Account: calico-node
Node: cluster-node1/192.168.0.1
Start Time: Tue, 06 May 2025 21:25:19 +0200
Labels: controller-revision-hash=5475bd5f8c
  k8s-app=calico-node
  pod-template-generation=1
Annotations:
Status: Pending
IP: 192.168.0.1
IPs:
  IP: 192.168.0.1
Controlled By: DaemonSet/calico-node

Init Containers:
  upgrade-ipam:
    Container ID:
    Image: docker.io/calico/cni:v3.27.0
    Image ID:
    Port:
    Host Port:
    Command: /opt/cni/bin/calico-ipam -upgrade
    State: Waiting
      Reason: PodInitializing
    Ready: False
    Restart Count: 0
    Environment Variables from:
      kubernetes-services-endpoint ConfigMap Optional: true
    Environment:
      KUBERNETES_NODE_NAME: (v1:spec.nodeName)
      CALICO_NETWORKING_BACKEND: <set to the key 'calico_backend' of config map 'calico-config'> Optional: false
    Mounts:
      /host/opt/cni/bin from cni-bin-dir (rw)
      /var/lib/cni/networks from host-local-net-dir (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qk8wq (ro)
  install-cni:
    Container ID:
    Image: docker.io/calico/cni:v3.27.0
    Image ID:
    Port:
    Host Port:
    Command: /opt/cni/bin/install
    State: Waiting
      Reason: PodInitializing
    Ready: False
    Restart Count: 0
    Environment Variables from:
      kubernetes-services-endpoint ConfigMap Optional: true
    Environment:
      CNI_CONF_NAME: 10-calico.conflist
      CNI_NETWORK_CONFIG: <set to the key 'cni_network_config' of config map 'calico-config'> Optional: false
      KUBERNETES_NODE_NAME: (v1:spec.nodeName)
      CNI_MTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
      SLEEP: false
    Mounts:
      /host/etc/cni/net.d from cni-net-dir (rw)
      /host/opt/cni/bin from cni-bin-dir (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qk8wq (ro)
  mount-bpffs:
    Container ID:
    Image: docker.io/calico/node:v3.27.0
    Image ID:
    Port:
    Host Port:
    Command: calico-node -init -best-effort
    State: Waiting
      Reason: PodInitializing
    Ready: False
    Restart Count: 0
    Environment:
    Mounts:
      /nodeproc from nodeproc (ro)
      /sys/fs from sys-fs (rw)
      /var/run/calico from var-run-calico (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qk8wq (ro)

Containers:
  calico-node:
    Container ID:
    Image: docker.io/calico/node:v3.27.0
    Image ID:
    Port:
    Host Port:
    State: Waiting
      Reason: PodInitializing
    Ready: False
    Restart Count: 0
    Requests:
      cpu: 250m
    Liveness: exec [/bin/calico-node -felix-live -bird-live] delay=10s timeout=10s period=10s #success=1 #failure=6
    Readiness: exec [/bin/calico-node -felix-ready -bird-ready] delay=0s timeout=10s period=10s #success=1 #failure=3
    Environment Variables from:
      kubernetes-services-endpoint ConfigMap Optional: true
    Environment:
      DATASTORE_TYPE: kubernetes
      WAIT_FOR_DATASTORE: true
      NODENAME: (v1:spec.nodeName)
      CALICO_NETWORKING_BACKEND: <set to the key 'calico_backend' of config map 'calico-config'> Optional: false
      CLUSTER_TYPE: k8s,bgp
      IP: autodetect
      CALICO_IPV4POOL_IPIP: Always
      CALICO_IPV4POOL_VXLAN: Never
      CALICO_IPV6POOL_VXLAN: Never
      FELIX_IPINIPMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
      FELIX_VXLANMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
      FELIX_WIREGUARDMTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
      CALICO_DISABLE_FILE_LOGGING: true
      FELIX_DEFAULTENDPOINTTOHOSTACTION: ACCEPT
      FELIX_IPV6SUPPORT: false
      FELIX_HEALTHENABLED: true
    Mounts:
      /host/etc/cni/net.d from cni-net-dir (rw)
      /lib/modules from lib-modules (ro)
      /run/xtables.lock from xtables-lock (rw)
      /sys/fs/bpf from bpffs (rw)
      /var/lib/calico from var-lib-calico (rw)
      /var/log/calico/cni from cni-log-dir (ro)
      /var/run/calico from var-run-calico (rw)
      /var/run/nodeagent from policysync (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qk8wq (ro)

Conditions:
  Type Status
  PodReadyToStartContainers False
  Initialized False
  Ready False
  ContainersReady False
  PodScheduled True

Volumes:
  lib-modules:
    Type: HostPath (bare host directory volume)
    Path: /lib/modules
    HostPathType:
  var-run-calico:
    Type: HostPath (bare host directory volume)
    Path: /var/run/calico
    HostPathType:
  var-lib-calico:
    Type: HostPath (bare host directory volume)
    Path: /var/lib/calico
    HostPathType:
  xtables-lock:
    Type: HostPath (bare host directory volume)
    Path: /run/xtables.lock
    HostPathType: FileOrCreate
  sys-fs:
    Type: HostPath (bare host directory volume)
    Path: /sys/fs/
    HostPathType: DirectoryOrCreate
  bpffs:
    Type: HostPath (bare host directory volume)
    Path: /sys/fs/bpf
    HostPathType: Directory
  nodeproc:
    Type: HostPath (bare host directory volume)
    Path: /proc
    HostPathType:
  cni-bin-dir:
    Type: HostPath (bare host directory volume)
    Path: /opt/cni/bin
    HostPathType:
  cni-net-dir:
    Type: HostPath (bare host directory volume)
    Path: /etc/cni/net.d
    HostPathType:
  cni-log-dir:
    Type: HostPath (bare host directory volume)
    Path: /var/log/calico/cni
    HostPathType:
  host-local-net-dir:
    Type: HostPath (bare host directory volume)
    Path: /var/lib/cni/networks
    HostPathType:
  policysync:
    Type: HostPath (bare host directory volume)
    Path: /var/run/nodeagent
    HostPathType: DirectoryOrCreate
  kube-api-access-qk8wq:
    Type: Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds: 3607
    ConfigMapName: kube-root-ca.crt
    Optional: false
    DownwardAPI: true

QoS Class: Burstable
Node-Selectors: kubernetes.io/os=linux
Tolerations: :NoSchedule op=Exists
  :NoExecute op=Exists
  CriticalAddonsOnly op=Exists
  node.kubernetes.io/disk-pressure:NoSchedule op=Exists
  node.kubernetes.io/memory-pressure:NoSchedule op=Exists
  node.kubernetes.io/network-unavailable:NoSchedule op=Exists
  node.kubernetes.io/not-ready:NoExecute op=Exists
  node.kubernetes.io/pid-pressure:NoSchedule op=Exists
  node.kubernetes.io/unreachable:NoExecute op=Exists
  node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:
  Type Reason Age From Message
  Normal Scheduled 12m default-scheduler Successfully assigned kube-system/calico-node-m2m5t to cluster-node1
  Warning FailedCreatePodSandBox 2m23s (x46 over 12m) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to get sandbox image "registry.k8s.io/pause:3.6": failed to pull image "registry.k8s.io/pause:3.6": failed to pull and unpack image "registry.k8s.io/pause:3.6": mkdir /var/lib/containerd/io.containerd.content.v1.content/ingest/aa9bcfccf9791b4bdf04565f866baee22a55b089d2789f5344011e0f00fafdde: no such file or directory

Calico cannot be started because the pause image can neither be found nor pulled.
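The mkdir failure in the event message suggests that the ingest directory below containerd's content store does not exist on the affected node. One possible next step, which was not verified on this cluster, is to recreate that directory, restart containerd and pull the pause image by hand. The function name ensure_ingest_dir is an assumption. The default path is taken from the error message.

```shell
# Create the content-store ingest directory below the containerd root $1
# (default: /var/lib/containerd) if it is missing, and report when it was created.
ensure_ingest_dir() {
    root=${1:-/var/lib/containerd}
    ingest=$root/io.containerd.content.v1.content/ingest
    if [ ! -d "$ingest" ]; then
        mkdir -p "$ingest" || return 1
        echo "created $ingest"
    fi
}

# Example (run as root on the affected node):
# ensure_ingest_dir
# systemctl restart containerd
# ctr --namespace k8s.io images pull registry.k8s.io/pause:3.6
```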