The research project aims to detect vulnerabilities in C and C++ code using Large Language Models (LLMs). While LLMs have been applied successfully in other areas such as natural language processing, they have not yet proven as effective at analyzing program code for vulnerabilities. This is because the models tend to adopt the coding style of the projects from which their training data is derived, which leads to a generalization problem: they perform worse on code from projects that are not represented in the training data.

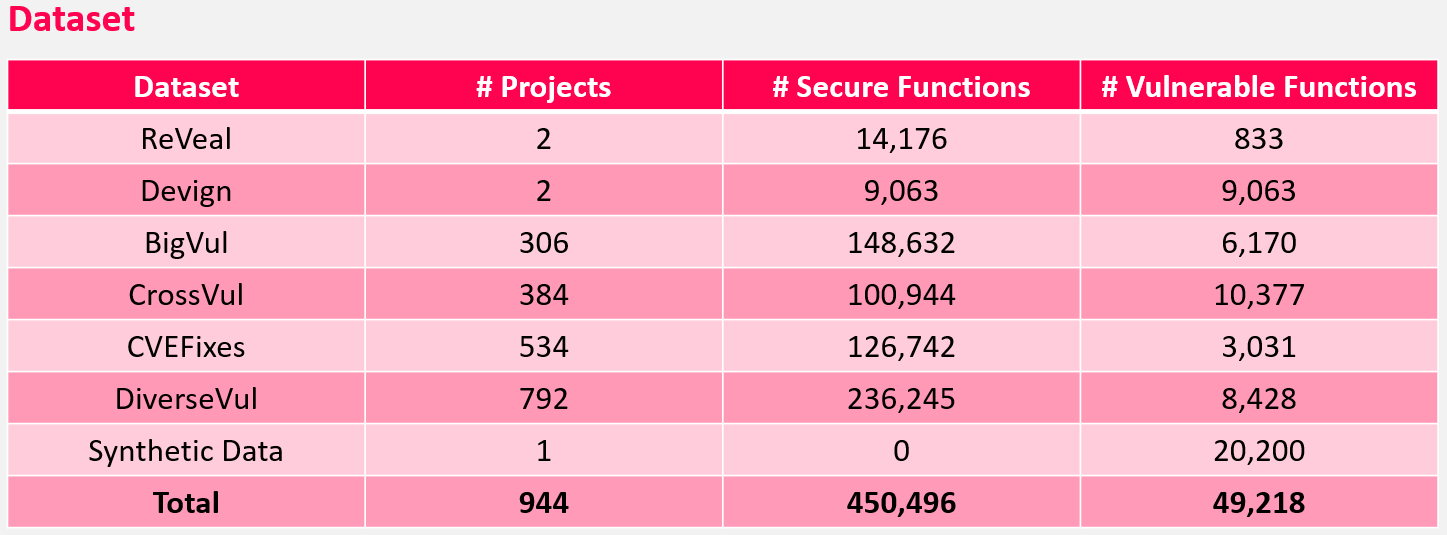

To address this issue, the project focuses on C and C++ code and aims to detect vulnerabilities in individual functions of at most 512 tokens. The project's dataset has been compiled from the datasets of several previous research papers and supplemented with synthetic data generated with OpenAI's GPT models. This synthetic data increases the number of vulnerable training samples and helps prevent the models from overfitting to the coding styles of individual projects.
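The 512-token limit and the merging of collected and synthetic samples can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's actual pipeline: the `Sample` record, its `origin` field, and `build_dataset` are hypothetical, and the regex-based `count_tokens` is only a crude stand-in for the subword tokenizer of the respective model, which would produce different counts.

```python
import re
from dataclasses import dataclass

# Token limit per function, as stated in the project description.
MAX_TOKENS = 512

# Crude stand-in tokenizer (assumption): identifiers/keywords, integer
# literals, and single non-whitespace characters each count as one token.
# A real pipeline would use the subword tokenizer of the model being trained.
_TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def count_tokens(source: str) -> int:
    """Approximate the token count of a C/C++ function."""
    return len(_TOKEN_RE.findall(source))


@dataclass
class Sample:
    """Hypothetical record for one labeled function."""
    code: str          # source text of a single C/C++ function
    vulnerable: bool   # True if the function contains a vulnerability
    origin: str        # hypothetical field: "dataset" or "synthetic"


def build_dataset(collected, synthetic, max_tokens=MAX_TOKENS):
    """Merge collected and synthetic samples, skipping functions that
    exceed the token limit and exact duplicates of the same source text."""
    seen = set()
    merged = []
    for sample in [*collected, *synthetic]:
        if count_tokens(sample.code) > max_tokens:
            continue  # function exceeds the token limit
        if sample.code in seen:
            continue  # identical function already included
        seen.add(sample.code)
        merged.append(sample)
    return merged


collected = [Sample("void copy(char *s) { char buf[8]; strcpy(buf, s); }", True, "dataset")]
synthetic = [Sample("int add(int a, int b) { return a + b; }", False, "synthetic")]
print(len(build_dataset(collected, synthetic)))  # prints 2
```

Filtering by length before training keeps every function within the model's input window instead of silently truncating it, which could cut off the vulnerable statement.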

The project exclusively uses models pre-trained specifically on code, based on architectures such as GPT, T5, and BERT. A detailed comparative analysis aims to determine which models perform best and which approaches can help mitigate the generalization problem.
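A common way to make the generalization problem measurable is a cross-project split, in which all functions of a given project land either in the training set or in the test set, never in both. The sketch below is an assumption for illustration only; the source does not state how the project splits its data, and `split_by_project` as well as the `(project, code, label)` tuple format are hypothetical.

```python
import random
from collections import defaultdict


def split_by_project(samples, test_fraction=0.2, seed=0):
    """Split (project, code, label) tuples into train and test lists so
    that no project contributes functions to both sides."""
    by_project = defaultdict(list)
    for sample in samples:
        by_project[sample[0]].append(sample)  # group functions by project name

    projects = sorted(by_project)          # deterministic order before shuffling
    random.Random(seed).shuffle(projects)  # reproducible random project order
    n_test = max(1, round(len(projects) * test_fraction))
    test_projects = set(projects[:n_test])

    train = [s for p in projects if p not in test_projects for s in by_project[p]]
    test = [s for p in projects if p in test_projects for s in by_project[p]]
    return train, test


samples = [
    ("projA", "int f(void) { return 0; }", False),
    ("projA", "void g(char *s) { char b[4]; strcpy(b, s); }", True),
    ("projB", "int h(int x) { return x * 2; }", False),
    ("projC", "void k(int *p) { free(p); free(p); }", True),
]
train, test = split_by_project(samples)
```

With a random per-function split, functions from the same project would appear on both sides, so a model could score well simply by recognizing project-specific style; holding out whole projects exposes exactly that weakness.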

- You can find more information on the Showcase website.

Supervisor:

Phone: +49 821 5586-3512

Students:

Philipp Gaag

Michael Janzer

Elias Schuler