Types of prompting

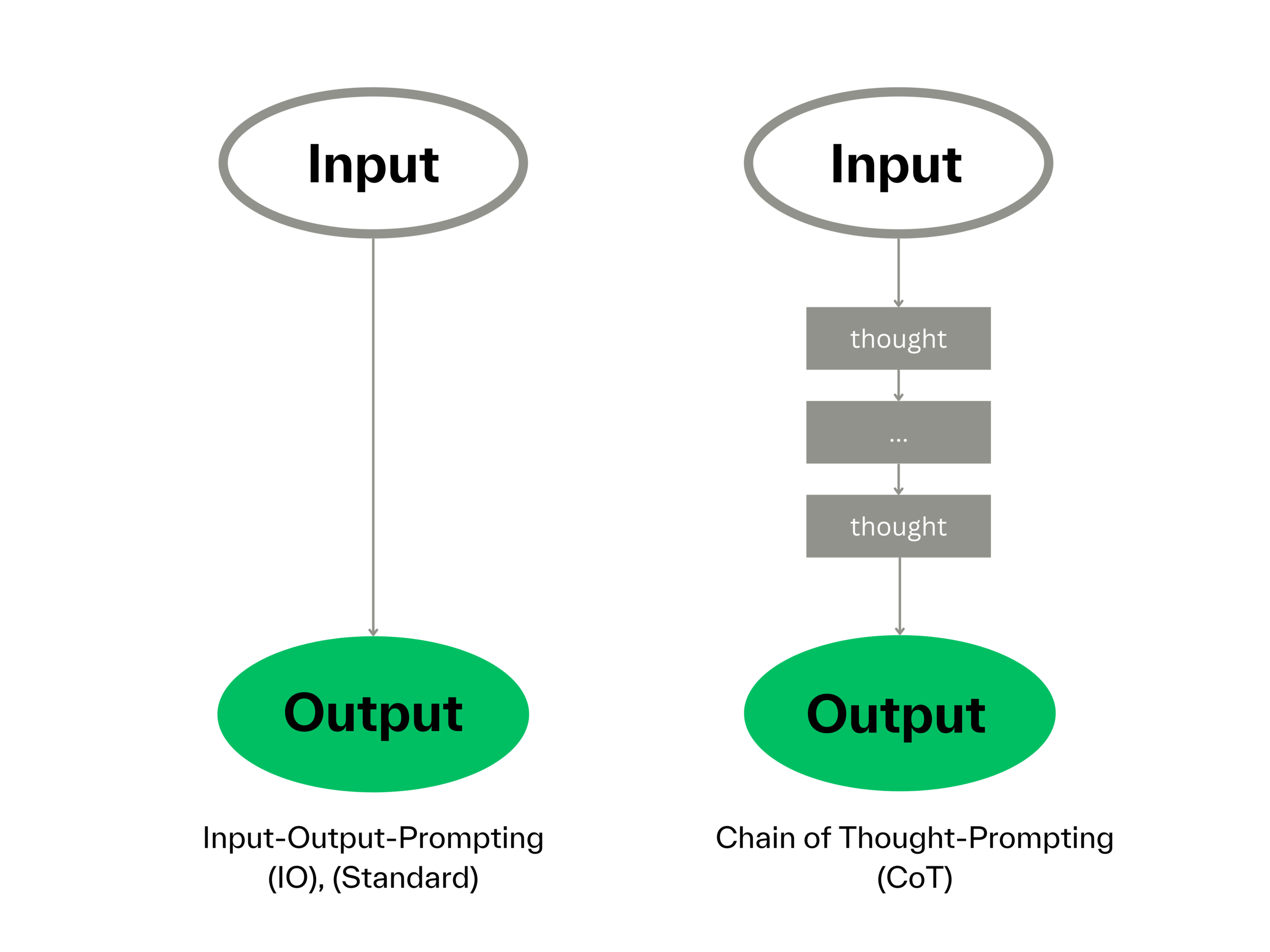

In the article “ChatGPT prompting competence”, we explain that the central concern of prompt design, or prompt engineering, is to formulate an input that lets the language model handle the task at hand as well as possible. On this page, we look at one specific form, known as “chain of thought” prompting, or CoT prompting for short.

Chain of Thought prompting

Showing a solution step by step

CoT prompting is about helping the language model to think systematically about a task and develop a solution strategy. Imagine you have a series of questions that steer your thoughts in the right direction. These questions, or prompts, are linked together and guide you step by step towards an answer or solution. In CoT prompting, your input serves as a guide that leads the AI to lay out a solution strategy for the given task step by step in its text output. To do this, you pose a series of interconnected questions that encourage a logical flow of answers.


How does it work?

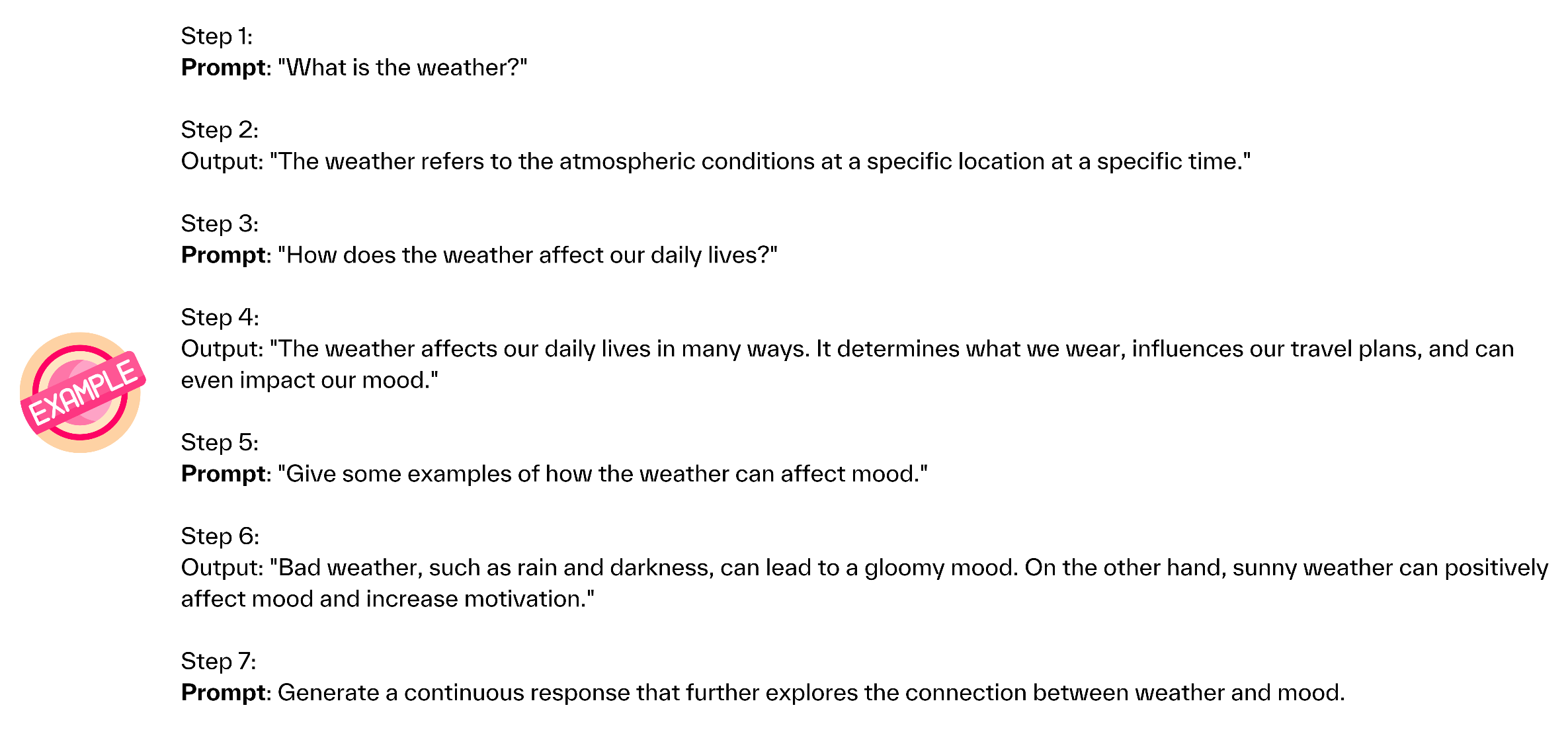

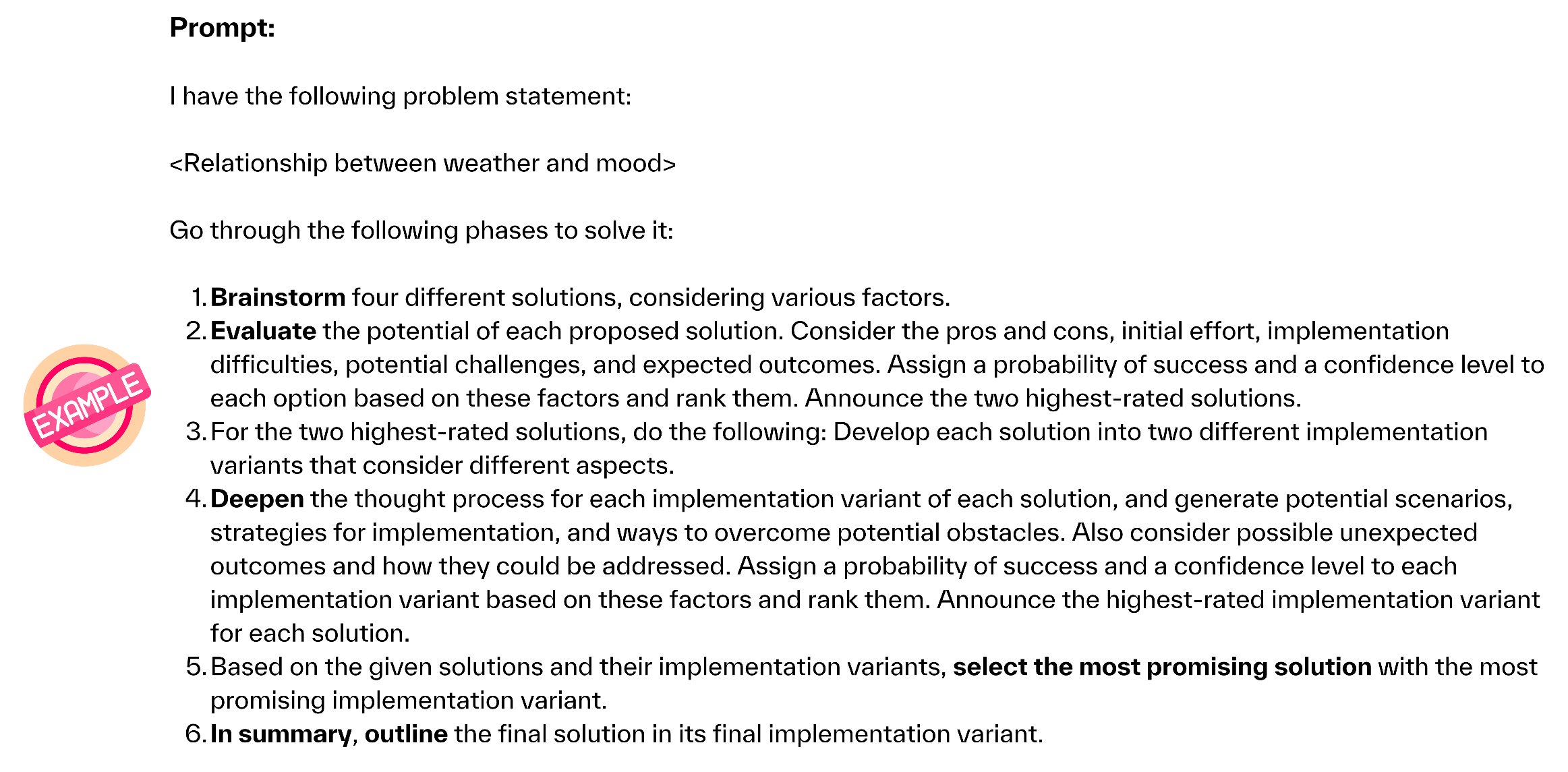

CoT prompting can be carried out with ChatGPT by giving it successive questions or instructions that build on its previous answers. With each turn, the model receives more context or information, which helps it produce a coherent response.

In this example, the conversation starts with a basic question (step 1), and the user then builds on the model's previous answers by asking more advanced questions (steps 3 and 5). In this way, the model is encouraged to develop a progressive train of thought and to take its previous answers into account when generating a coherent response.
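The turn-by-turn pattern described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_chain`, `fake_model` and the three sample questions are hypothetical names and content that do not come from the article, and `fake_model` is a stand-in that makes no call to ChatGPT. The point it shows is that every new question is sent together with all earlier questions and answers.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def run_chain(questions: List[str],
              ask_model: Callable[[List[Message]], str]) -> List[Message]:
    """Ask the questions in order, passing all earlier turns back as context."""
    history: List[Message] = []
    for question in questions:
        history.append({"role": "user", "content": question})
        answer = ask_model(history)  # the model sees every earlier turn
        history.append({"role": "assistant", "content": answer})
    return history


def fake_model(history: List[Message]) -> str:
    """Hypothetical stand-in for a chat model: reports how much context it saw."""
    seen = sum(1 for message in history if message["role"] == "assistant")
    return f"answer {seen + 1} (built on {seen} earlier answers)"


# Hypothetical questions that build on one another.
questions = [
    "What are the main causes of food waste in households?",
    "Which of these causes is easiest to address, and why?",
    "Outline a one-week plan that tackles that cause.",
]

conversation = run_chain(questions, fake_model)
for step, message in enumerate(conversation, start=1):
    print(step, message["role"], "-", message["content"])
```

In this sketch the user's questions land on steps 1, 3 and 5 and the model's answers on steps 2, 4 and 6. To try it with a real model, `fake_model` would be replaced by a function that forwards the message list to the chat service of your choice.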

Demonstrably better results

CoT prompting gives us an apparent insight into the AI's way of thinking, because the model explains the steps it takes to solve a task. A supposed sequence of thoughts becomes visible. Beyond this gain in transparency, researchers at Google showed in experiments with various language models that CoT prompting improves the quality of results once the model exceeds a certain size (Wei & Zhou 2022).

Tree of Thoughts prompting

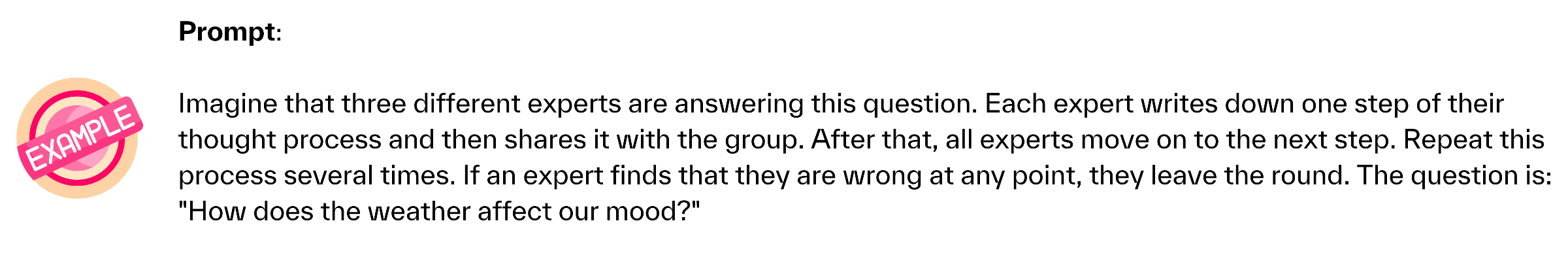

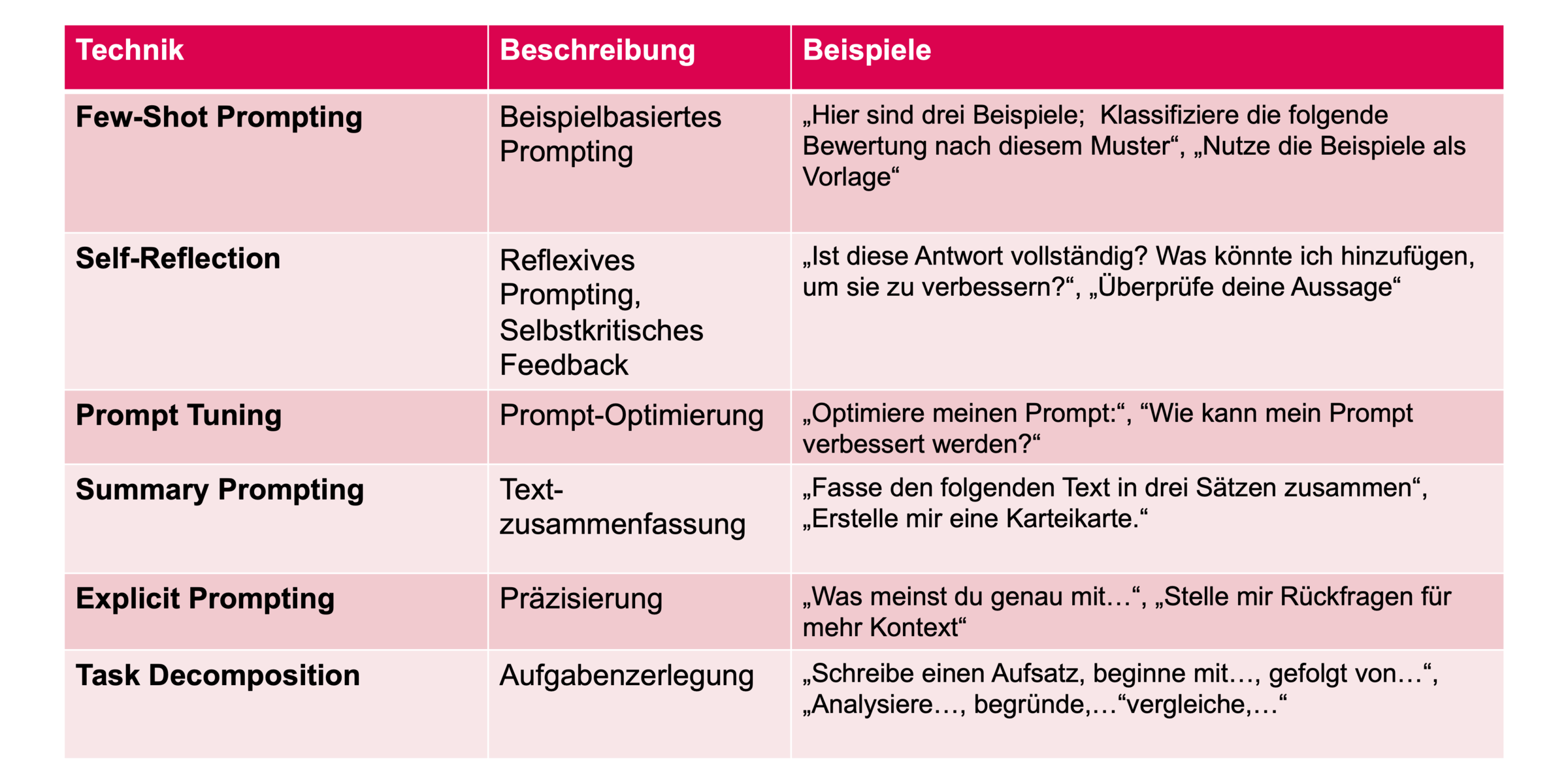

The linear approach of CoT prompting contrasts with another framework: Tree of Thoughts prompting, or ToT prompting for short. Imagine three experts discussing the best approach to a complex problem in their industry. Each expert contributes different ideas and solutions, the various options are explored and evaluated, and ultimately the best solution is chosen. ToT prompting sets up exactly this kind of scenario: several lines of reasoning are pursued in parallel, and an evaluation scheme is built in. Each step is reviewed before the next one is taken, which makes the approach more thorough and more critical. While CoT follows a single straight path, ToT follows a structure that resembles a tree with branches.
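The expert-panel scenario can be turned into a single prompt. The sketch below is a minimal illustration, not the prompt from any particular source: the function name `build_expert_panel_prompt`, the wording of the instructions and the sample problem are all assumptions made for this example.

```python
def build_expert_panel_prompt(problem: str, experts: int = 3) -> str:
    """Build a ToT-style prompt that asks for several experts who propose,
    compare and rate steps. The wording is a hypothetical illustration."""
    if experts < 2:
        raise ValueError("a panel needs at least two experts")
    lines = [
        f"Imagine {experts} experts are discussing the problem below.",
        "Each expert writes down one step of their approach and shares it with the group.",
        "The group then rates every proposed step and drops approaches that look weak.",
        "Repeat this until the group agrees on the best solution, then state it.",
        "",
        f"Problem: {problem}",
    ]
    return "\n".join(lines)


# Hypothetical sample problem.
prompt = build_expert_panel_prompt(
    "How can a small bakery cut its energy costs without reducing output?"
)
print(prompt)
```

The resulting text would be pasted into the chat as one message. The rating instruction in the third line is what separates this from a plain step-by-step prompt, since it asks for weak branches to be abandoned.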

The ToT approach allows the model to evaluate its own intermediate thoughts and to decide whether to continue along the current path or switch to another one.

ToT is inspired by the way people solve complex reasoning problems through trial and error. The technique therefore encourages the AI to explore different ideas and to re-evaluate them as necessary in order to find the best solution.
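The explore, evaluate and prune cycle can be illustrated with a toy search. This is a sketch under loud assumptions: in real ToT prompting the language model itself proposes and rates the thoughts, whereas here the hypothetical functions `propose`, `evaluate` and `is_solution` are plain Python stand-ins, and the number puzzle (reach 14 from 1 using "+3" and "*2") is invented for this example. The search keeps only the most promising branches at each level, a simple beam search chosen here for brevity.

```python
from typing import Callable, List, Optional, Tuple

TARGET = 14  # toy goal: reach this number starting from 1


def propose(state: int) -> List[Tuple[str, int]]:
    """Stand-in for the model proposing next thoughts: two possible moves."""
    return [("+3", state + 3), ("*2", state * 2)]


def evaluate(state: int) -> float:
    """Stand-in for the model rating a thought: closer to the target is better."""
    return -abs(TARGET - state)


def is_solution(state: int) -> bool:
    return state == TARGET


def tree_of_thoughts(start: int,
                     propose: Callable[[int], List[Tuple[str, int]]],
                     evaluate: Callable[[int], float],
                     is_solution: Callable[[int], bool],
                     beam_width: int = 2,
                     max_depth: int = 4) -> Optional[List[str]]:
    """Expand every kept branch, rate the results, keep the best few, repeat."""
    frontier: List[Tuple[List[str], int]] = [([], start)]
    for _ in range(max_depth):
        candidates: List[Tuple[List[str], int]] = []
        for path, state in frontier:
            for thought, new_state in propose(state):
                new_path = path + [thought]
                if is_solution(new_state):
                    return new_path
                candidates.append((new_path, new_state))
        # Evaluation step: rank all branches, continue only with the best ones.
        candidates.sort(key=lambda item: evaluate(item[1]), reverse=True)
        frontier = candidates[:beam_width]
    return None  # no branch reached the target within max_depth


solution = tree_of_thoughts(1, propose, evaluate, is_solution)
print(solution)  # ['+3', '+3', '*2']
```

With `beam_width=1` the same search follows a single line of thought, as a purely linear approach would, and returns `None` for this puzzle, because the branch that looks best early on never reaches the target. Keeping two branches alive lets the search abandon that path and finish on the other one.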

1st example:

2nd example:

Example ToT prompt based on Reddit post “My Tree of Thoughts prompt” by user bebosbebos