As we are still in the development phase, we are searching for the best platform for image processing on the drone. This blog entry presents the software that needs to run on the board, identifies modules with potential for optimization and benchmarks the software on different embedded boards.

Introduction

Refugees continue to cross the Mediterranean Sea, fleeing war in their home countries and risking their lives on overcrowded boats bound for Europe. The death toll of these crossings is estimated at 16,000 over the last five years (roughly 9 deaths per day!), and it continues to rise.

For various political reasons, there are fewer ships on the water to rescue people in distress. Nevertheless, several NGOs still try to save people from drowning. One of them is Seawatch, which has been operating since 2015. Seawatch has a large ship that is well equipped for search and rescue (SAR) missions for people in distress at sea. Because the line of sight is limited by the curvature of the earth and the ship's speed on the water is limited, they can only cover about 100 km² per hour. This is not a lot, as the area they need to observe is about 100,000 km².

To cover larger areas, they also operate a plane, the Moonbird, to search the sea. Flying at high altitude and high speed, it can cover around 6,000 km² per flight.

As the plane is not always available and takes hours to arrive from Malta, they need additional ways to observe large areas at sea.

SearchWing – Autonomous Drone

By building low-cost, long-range drones that are launched from the ship, we can enlarge the observation area around NGO ships. Using the drones and embedded image recognition, we autonomously search for refugee boats on the sea and send this information to the rescue vessels.

Because it is difficult to transmit large amounts of data (like full images) from the drone back to the ship, we need to run the image processing on the plane. Only small image crops of the detected boats will be sent to the ship. On the ship, a human operator stays in the loop to double-check the images and coordinate the rescue.

The current state of the hardware can be found over here: https://www.hs-augsburg.de/searchwing/?page_id=187

Requirements for the image processing platform

The embedded image processing platform has to meet a few requirements:

- Processing a single image must not take longer than 3 seconds.

  - If the drone flies over a boat at 50 km/h, the boat is visible for about 35 seconds under ideal conditions. In many situations, however, a boat is visible for a much shorter time. Since a detection is only validated once the boat has been detected multiple times, each image has to be processed quickly enough to capture the boat several times while it is in view.

- Low power consumption.

  - The available power on the drone is very limited. Thus we need a very efficient processing unit, so that no power is taken away from the drone's motor.
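The timing requirement can be checked with a little arithmetic. The sketch below assumes that the 2464-pixel side of the Pi Cam v2 image (3280 × 2464 pixels) points along the flight direction, uses the ~0.2 m per pixel ground resolution quoted further below for 500 m altitude, and treats the boat as stationary. The function names are illustrative only.

```python
def visible_seconds(pixels_along_track: int, gsd_m: float, speed_kmh: float) -> float:
    """Time a stationary boat stays inside the image while the drone flies over it."""
    footprint_m = pixels_along_track * gsd_m  # ground length covered by the image
    speed_ms = speed_kmh / 3.6                # km/h -> m/s
    return footprint_m / speed_ms


def frames_per_pass(visible_s: float, frame_time_s: float) -> int:
    """Number of complete processing cycles that fit into the visibility window."""
    return int(visible_s // frame_time_s)


# Assumption: the 2464-pixel image side is aligned with the flight track
t_visible = visible_seconds(2464, 0.2, 50.0)
print(f"{t_visible:.1f} s in view")               # 35.5 s in view
print(frames_per_pass(t_visible, 3.0), "frames")  # 11 frames
print(frames_per_pass(10.0, 3.0), "frames")       # 3 frames for a brief 10 s sighting
```

Even a boat that is only in view for 10 seconds is still processed three times at the 3-second limit, which is the minimum needed to confirm a detection.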

The detection algorithm also needs to fulfill a few requirements that result from the problem we want to solve:

- Type of image recognition algorithm: object detector

  - An object detection algorithm allows us to detect objects in single images and to verify them by accurately tracking their position over multiple images in a postprocessing step. Afterwards we can extract a cut-out of the boat from the image for transmission.

- The algorithm needs to run at the full camera resolution of the Pi Cam v2

  - To maximize the observable area, we need to fly as high as possible. At an altitude of 500 meters, one pixel of an image corresponds to ~0.2 meters. Most refugee boats are approximately 10 meters long and 2 meters wide, i.e. only about 50 × 10 pixels. To have enough pixels to recognize boats, we need to keep the full resolution.

- Most importantly: no false negatives allowed

  - As we are directly dealing with human lives, we have to try at all costs not to miss a single boat in an image. In return, we can accept a higher false positive rate, as long as the system remains usable for the human operator (~1 false positive every 10 minutes).
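The ~0.2 m per pixel figure follows from the pinhole camera model, GSD = altitude × pixel pitch / focal length. The sketch assumes the commonly quoted Pi Cam v2 (Sony IMX219) values of 1.12 µm pixel pitch and 3.04 mm focal length and a camera looking straight down. It also converts the "~1 false positive every 10 minutes" budget into a per-frame rate, assuming one frame every 3 seconds.

```python
PIXEL_PITCH_M = 1.12e-6   # assumption: IMX219 pixel size
FOCAL_LENGTH_M = 3.04e-3  # assumption: Pi Cam v2 lens focal length


def ground_sample_distance(altitude_m: float,
                           pixel_pitch_m: float = PIXEL_PITCH_M,
                           focal_length_m: float = FOCAL_LENGTH_M) -> float:
    """Metres on the ground covered by one pixel for a nadir-looking pinhole camera."""
    return altitude_m * pixel_pitch_m / focal_length_m


def pixels_on_target(size_m: float, gsd_m: float) -> float:
    """How many pixels an object of the given size spans."""
    return size_m / gsd_m


def max_false_positives_per_frame(frame_time_s: float, minutes_per_fp: float = 10.0) -> float:
    """Allowed false positives per processed frame for a given operator budget."""
    frames = minutes_per_fp * 60.0 / frame_time_s
    return 1.0 / frames


gsd = ground_sample_distance(500.0)
print(f"GSD at 500 m: {gsd:.3f} m/px")  # 0.184, close to the ~0.2 m quoted above
print(f"boat: ~{pixels_on_target(10.0, gsd):.0f} x {pixels_on_target(2.0, gsd):.0f} px")  # 54 x 11
print(f"half resolution: boat width ~{pixels_on_target(2.0, 2 * gsd):.0f} px")            # 5
print(max_false_positives_per_frame(3.0))  # 0.005
```

Halving the resolution would leave only about 5 pixels across the width of a boat, and the false positive budget means that at most one in 200 processed frames may produce a false alarm.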

Current implementation

The current prototype implements the boat detector as multiple modules. As a framework we use ROS, which handles the communication between the modules, transforms 3D data and provides a handy 3D visualization for development. The overall detection algorithm was designed under the following assumptions:

- When flying over the sea at 50-80 km/h, the drone is much faster than the boats, so boats can be assumed to be almost stationary compared to the drone's motion

- Waves appear and disappear over time

- Approach to detect boats:

  - Detect parts of the image that do not change over time

  - Redetect these parts by checking the same geographic position in consecutive frames

  - If something is redetected over 3 frames, we can assume it is a boat
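To put the "boats are almost stationary" assumption into numbers, the following sketch compares how far the drone and a boat move between two frames. The boat speed of 5 km/h is a hypothetical value chosen for illustration, and the 3-second frame interval is the worst case from the requirements.

```python
def displacement_m(speed_kmh: float, interval_s: float) -> float:
    """Distance covered at constant speed during one frame interval."""
    return speed_kmh / 3.6 * interval_s


FRAME_INTERVAL_S = 3.0  # worst-case processing time per frame
# The boat speed is a hypothetical example value
for name, speed_kmh in [("drone, slow", 50.0), ("drone, fast", 80.0), ("boat, assumed", 5.0)]:
    print(f"{name}: {displacement_m(speed_kmh, FRAME_INTERVAL_S):.1f} m per frame")
# drone, slow: 41.7 m per frame
# drone, fast: 66.7 m per frame
# boat, assumed: 4.2 m per frame
```

Once the proposals are projected into world coordinates, a boat therefore shifts by only a few metres between frames while the image content shifts by tens of metres, which is what makes redetection at the same position feasible.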

The implementation of this algorithm is split into three modules:

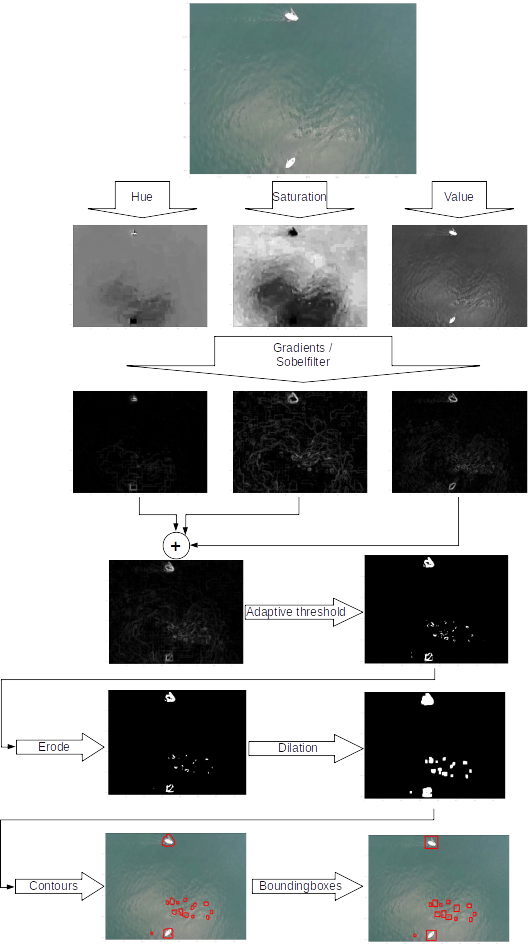

1. Boat proposal detector

The first step is to detect boat proposals in the camera images. This is achieved by applying a series of classical image processing filters to the image. The filters used and their intermediate outputs can be seen in the image below:

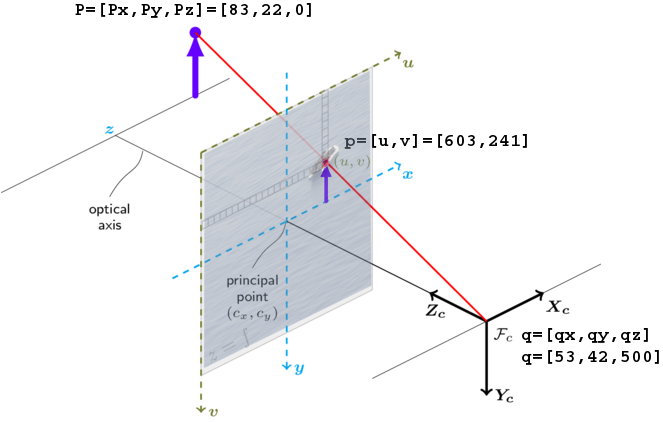
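The actual filter chain is shown in the image above. As a much simpler, hypothetical stand-in, the sketch below shows the general shape of a classical proposal detector: mark pixels that deviate strongly from the sea background, group them into connected blobs and return their bounding boxes. The function name, the k·σ threshold and the minimum blob area are assumptions for illustration, not the SearchWing parameters.

```python
from collections import deque
from statistics import mean, pstdev


def propose_boats(img, k=2.0, min_area=2):
    """Return bounding boxes (x0, y0, x1, y1) of blobs that stand out from the background.

    img:      2D list of grayscale values (rows of equal length).
    k:        hypothetical threshold in standard deviations of the whole image.
    min_area: hypothetical minimum blob size in pixels (drops tiny wave crests).
    """
    flat = [p for row in img for p in row]
    mu, sigma = mean(flat), pstdev(flat)
    h, w = len(img), len(img[0])
    # 1) Binary mask of pixels that deviate strongly from the mean (sea) value
    mask = [[abs(img[y][x] - mu) > k * sigma for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    boxes = []
    # 2) Group masked pixels into 4-connected components via breadth-first search
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            queue = deque([(y, x)])
            seen[y][x] = True
            ys, xs = [], []
            while queue:
                cy, cx = queue.popleft()
                ys.append(cy)
                xs.append(cx)
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # 3) Keep only blobs that are large enough to be a boat candidate
            if len(ys) >= min_area:
                boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes


# Synthetic 8x8 "sea" with a dark 3x2 boat and a single bright wave pixel
sea = [[100] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (3, 4, 5):
        sea[y][x] = 30
sea[6][6] = 200
print(propose_boats(sea))  # [(3, 2, 5, 3)]
```

Using global image statistics keeps the sketch short; a real detector would more likely estimate the background locally and more robustly.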

2. Proposal 3D Position calculation

Then we combine the drone's current position and orientation with the previously measured intrinsic camera calibration to calculate the 3D positions of the detected boat proposals. Knowing these positions, we can try to redetect the proposals in consecutive frames.

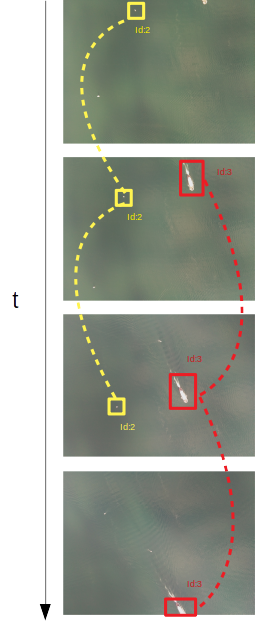
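The 3D position calculation boils down to a ray-plane intersection. The sketch assumes a distortion-free pinhole camera with intrinsics fx, fy, cx, cy, a camera-to-world rotation R derived from the drone's orientation, a local world frame with z pointing up, and the sea surface as the plane z = 0. In the real system ROS handles these transforms; the function below is a hypothetical, standalone illustration, and the intrinsics in the usage example are made-up values.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_ground(u: float, v: float,
                    fx: float, fy: float, cx: float, cy: float,
                    R: Sequence[Sequence[float]], cam_pos: Vec3) -> Optional[Vec3]:
    """Intersect the viewing ray of pixel (u, v) with the sea plane z = 0.

    fx, fy, cx, cy: pinhole intrinsics from the camera calibration (no lens distortion).
    R:       3x3 rotation matrix camera -> world (from the drone's orientation).
    cam_pos: camera position in a local world frame with z pointing up (metres).
    """
    # Viewing ray in camera coordinates (OpenCV convention: x right, y down, z forward)
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into the world frame: d = R * d_cam
    d = [sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3)]
    if d[2] >= 0.0:
        return None  # ray points at or above the horizon and never hits the sea
    s = -cam_pos[2] / d[2]  # ray length factor at which z reaches 0
    return (cam_pos[0] + s * d[0], cam_pos[1] + s * d[1], 0.0)


# Nadir-looking camera: camera x -> world x, camera y -> world -y, camera z -> world -z
R_NADIR = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
# Hypothetical intrinsics for a 3280x2464 image; camera 500 m above the sea
print(pixel_to_ground(1910.0, 1232.0, 2700.0, 2700.0, 1640.0, 1232.0, R_NADIR, (0.0, 0.0, 500.0)))
```

A pixel 270 columns right of the image centre lands about 50 m along the world x axis, which matches the ~0.2 m per pixel ground resolution at 500 m.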

3. Tracking of the boat positions

A tracking algorithm uses the boat positions from single frames to look for image parts that barely move across consecutive frames. Once something has been redetected over 3 frames, we assume it is a boat.

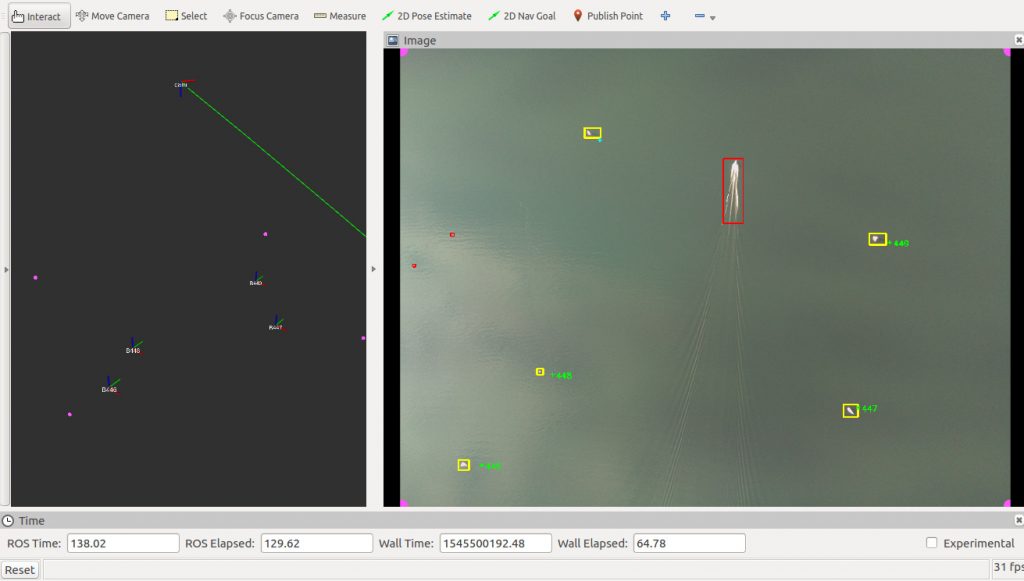
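A minimal tracker in this spirit can be sketched as greedy nearest-neighbour association in world coordinates. The 10 m gating radius, the rule that a track is dropped after a single frame without a match, and the class names are assumptions for illustration; the tracker actually used in the project may work differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x: float         # last known world position in metres
    y: float
    hits: int = 1    # number of frames in which the proposal was (re)detected
    missed: int = 0  # consecutive frames without a matching proposal


class BoatTracker:
    """Hypothetical tracker that confirms a boat after `confirm_after` detections."""

    def __init__(self, gate_m: float = 10.0, confirm_after: int = 3, max_missed: int = 0):
        self.gate_m = gate_m              # max. distance for a proposal to count as redetection
        self.confirm_after = confirm_after
        self.max_missed = max_missed      # 0 = detections must occur in consecutive frames
        self.tracks = []                  # list of Track

    def update(self, proposals):
        """Feed the world positions [(x, y), ...] of one frame; return confirmed tracks."""
        unmatched = list(proposals)
        for track in self.tracks:
            # Greedy association: take the closest remaining proposal inside the gate
            pos = (track.x, track.y)
            best = min(unmatched, key=lambda p: math.dist(p, pos), default=None)
            if best is not None and math.dist(best, pos) <= self.gate_m:
                unmatched.remove(best)
                track.x, track.y = best
                track.hits += 1
                track.missed = 0
            else:
                track.missed += 1
        # Drop tracks that disappeared, e.g. waves
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        # Every leftover proposal starts a new tentative track
        self.tracks += [Track(x, y) for x, y in unmatched]
        return [t for t in self.tracks if t.hits >= self.confirm_after]


tracker = BoatTracker()
tracker.update([(100.0, 200.0), (500.0, 500.0)])             # frame 1: boat + wave
tracker.update([(101.0, 201.5)])                             # frame 2: wave has vanished
confirmed = tracker.update([(102.0, 203.0), (800.0, 50.0)])  # frame 3
print(confirmed)  # [Track(x=102.0, y=203.0, hits=3, missed=0)]
```

Raising `max_missed` would tolerate a boat being missed in single frames, which suits the "no false negatives" requirement, at the cost of keeping wave-induced tracks alive longer.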

Running the 3 steps on a prerecorded dataset generates the following visualization in ROS:

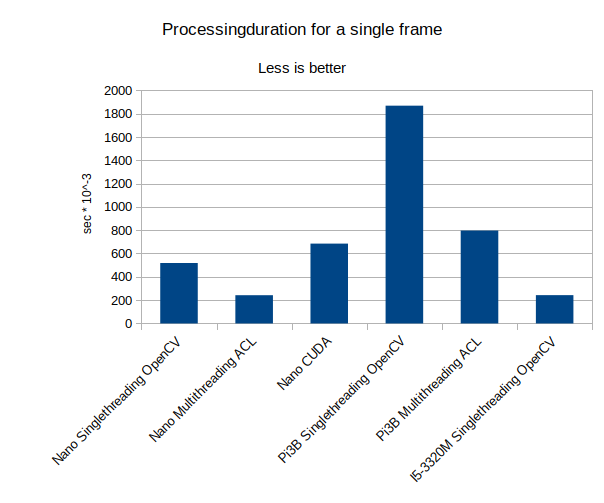

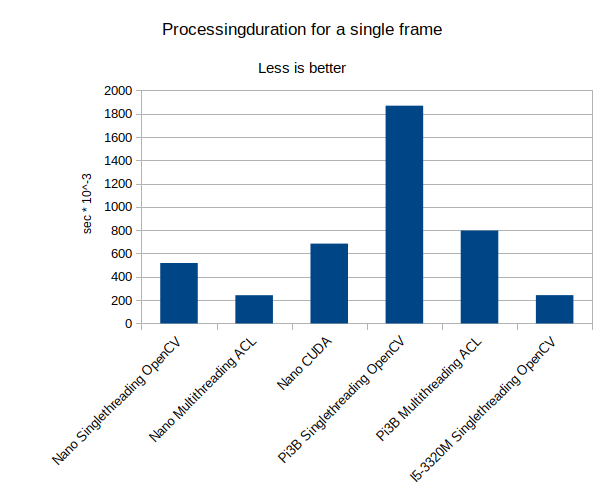

To identify which modules are worth optimizing for the embedded target platform, we measured the processing duration of each module:

| Module name | Duration for a single frame [s] |
|---|---|
| Boat proposal detector | 0.224 |
| Proposal 3D position calculation | 0.00005 |
| Tracking of the boat positions | 0.002 |

(Measured on my T430 with an i5-3320M processor / OpenCV 3.3)
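Per-module durations like these can be collected by timing each stage of the chain separately. The sketch uses Python's `time.perf_counter` with placeholder stage functions; it is not necessarily how the numbers in the table were obtained.

```python
import time


def time_stages(stages, frame):
    """Run (name, function) stages in order, feeding each output into the next stage.

    Returns the final output and a dict of per-stage durations in seconds.
    """
    durations = {}
    data = frame
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        durations[name] = time.perf_counter() - start
    return data, durations


# Placeholder stages standing in for the three modules (hypothetical outputs)
stages = [
    ("Boat proposal detector", lambda image: [(120, 80, 170, 90)]),
    ("Proposal 3D position calculation", lambda boxes: [(12.5, -3.0) for _ in boxes]),
    ("Tracking of the boat positions", lambda positions: len(positions)),
]
result, durations = time_stages(stages, frame=None)
for name, seconds in durations.items():
    print(f"{name}: {seconds:.6f} s")
```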

The proposal detection is clearly the most expensive part of the algorithm, accounting for about 99% of the per-frame processing time. Therefore we need to put some work into optimizing this module for the specific target platform on the drone.
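Amdahl's law makes this concrete: if a fraction p of the runtime is accelerated by a factor s, the whole pipeline speeds up by 1 / ((1 - p) + p / s). The sketch plugs in the measured durations from the table; the 4× detector speedup is a hypothetical example.

```python
# Durations from the table (T430, OpenCV 3.3), in seconds per frame
TIMINGS_S = {
    "Boat proposal detector": 0.224,
    "Proposal 3D position calculation": 0.00005,
    "Tracking of the boat positions": 0.002,
}


def share(timings, name):
    """Fraction of the total frame time spent in one module."""
    return timings[name] / sum(timings.values())


def overall_speedup(p: float, s: float) -> float:
    """Amdahl's law: pipeline speedup when a fraction p of the runtime runs s times faster."""
    return 1.0 / ((1.0 - p) + p / s)


p = share(TIMINGS_S, "Boat proposal detector")
print(f"{p:.1%}")                        # 99.1%
print(f"{overall_speedup(p, 4.0):.2f}")  # 3.89 (hypothetical 4x faster detector)
```

Conversely, even an infinitely fast 3D projection and tracker would save less than 1% of the frame time, so optimization effort is best spent on the proposal detector.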

As you may have noticed, we currently do not use any deep learning algorithms. This is partly because deep learning models are still something of a black box and are hard to debug when a particular image with a boat is not detected. To avoid these situations, we decided to focus on classical image recognition algorithms first.

Optimization of image processing algorithms

There are several options to speed up image processing algorithms.

CPU

- Singlethreading

This is not really accelerated code, as it is the standard case, but I list it here for the sake of completeness.

- Multithreading

By splitting the work into several tasks, we can distribute it across multiple processor cores. There are different programming models for implementing this kind of parallelism.

- SIMD

Using SIMD (single instruction, multiple data) instructions, the CPU can apply one operation to multiple data elements at once. Most modern x86/x64 and ARM CPUs support this, e.g. via SSE/AVX on x86 and NEON on ARM.
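For image filters, the simplest form of multithreading is to split the image into horizontal strips and process each strip on its own core. The sketch shows this with a 1×3 horizontal mean filter, where every row can be computed independently; a filter that also looks at neighbouring rows would need each strip to include a halo of overlapping rows. In pure CPython the global interpreter lock prevents real speedups for this kind of Python-level loop; in compiled code such as OpenCV's C++ implementation the same pattern runs truly in parallel. Function names and the strip count are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def blur_row(row):
    """1x3 horizontal mean filter with replicated borders (each row is independent)."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0 for i in range(n)]


def blur_strip(strip):
    """Process one horizontal strip of rows."""
    return [blur_row(r) for r in strip]


def blur_parallel(img, workers=4):
    """Split the image into `workers` strips and filter them concurrently."""
    step = max(1, -(-len(img) // workers))  # ceiling division: rows per strip
    strips = [img[i:i + step] for i in range(0, len(img), step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in strip order, so rows can simply be concatenated
        return [row for strip in pool.map(blur_strip, strips) for row in strip]


img = [[float(x * y) for x in range(7)] for y in range(10)]
print(blur_parallel(img) == blur_strip(img))  # True: same result as single-threaded
```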

GPU

- OpenGL – Shader

OpenGL shaders can be used to implement computer vision filters. The advantage of this approach is that most available embedded boards provide an OpenGL driver.

- OpenCL

OpenCL is a framework for writing accelerated algorithms for a wide range of processors. There are implementations for GPUs as well as for CPUs. As some of the boards we have in mind provide an OpenCL interface to their GPU, I put it into this category.

- CUDA

CUDA is Nvidia's proprietary, OpenCL-like interface for accelerating algorithms on the CUDA cores of Nvidia GPUs.

Dedicated hardware

Due to the demand for cheap computer vision products and the recent success of deep learning, many dedicated deep learning processors are currently being developed. They are designed for high performance at low power consumption, enabling cheap deployment of these performance-hungry algorithms in products.

Benchmark platforms

For the benchmark of the image processing chain, we chose the following platforms and implemented the listed accelerations on each of them:

| Platform | Accelerations |
|---|---|
| Nvidia Jetson Nano | Singlethreading – SIMD / NEON – OpenCV; Multithreading – SIMD / NEON – ARM Compute Library (ACL); CUDA – OpenCV |
| Raspberry Pi 3 Model B | Singlethreading – SIMD / NEON – OpenCV; Multithreading – SIMD / NEON – ARM Compute Library (ACL) |
| Lenovo T430 – Core i5-3320M | Singlethreading – SIMD / SSE / AVX – OpenCV |

For the following benchmark, we ran the image processing chain 10 times in a row on a preloaded image and measured the duration of each run. The first measurement was discarded to exclude warm-up effects.
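This measurement procedure (ten runs, first run discarded) can be sketched as follows. The run and warm-up counts mirror the description; the function name and the example workload are assumptions.

```python
import statistics
import time


def benchmark(fn, arg, runs: int = 10, warmup: int = 1):
    """Run fn(arg) `runs` times; return (mean, stdev, n) over the runs after warm-up."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(arg)
        durations.append(time.perf_counter() - start)
    # Discard the first run(s), which may include one-off costs such as
    # lazy initialisation or cold caches
    kept = durations[warmup:]
    return statistics.mean(kept), statistics.stdev(kept), len(kept)


# Example workload standing in for the image processing chain
mean_s, stdev_s, n = benchmark(sorted, list(range(100_000, 0, -1)))
print(f"{n} runs: {mean_s * 1000:.2f} ms ± {stdev_s * 1000:.2f} ms")
```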

The Jetson Nano was running in its 10 W power mode.

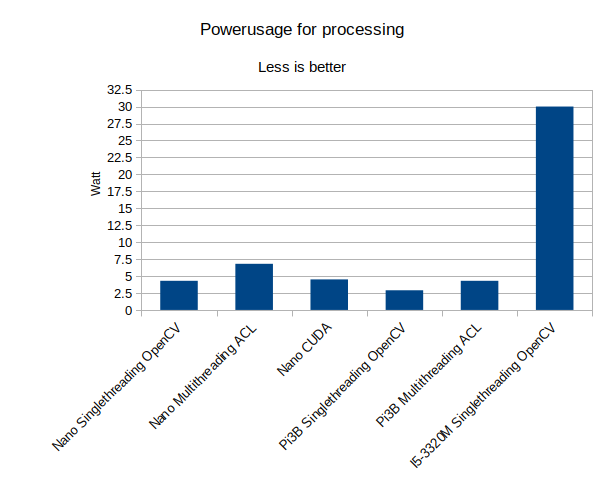

I also measured the approximate power consumption with a power meter at the wall plug while running the detection framework.

The value for the T430 is only an estimate, as it needs to power more peripherals than the other platforms.

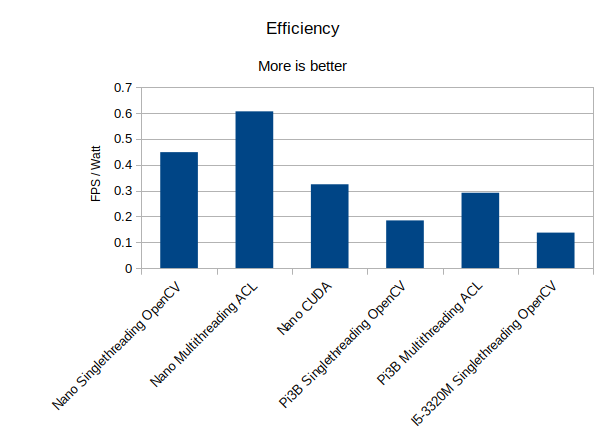

Additionally, we can estimate the efficiency of each platform by dividing its hypothetical frames per second (the inverse of the per-frame duration) by its power consumption in watts.
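The efficiency metric is simple to compute. The sketch uses placeholder numbers, not the measured values from the charts in this article; note that frames per second per watt is the same as frames per joule, i.e. the inverse of the energy spent on one frame.

```python
def efficiency_fps_per_watt(frame_time_s: float, power_w: float) -> float:
    """Hypothetical frames per second divided by the power draw."""
    fps = 1.0 / frame_time_s
    return fps / power_w


def energy_per_frame_j(frame_time_s: float, power_w: float) -> float:
    """Energy spent on one frame; fps/W is exactly its inverse (frames per joule)."""
    return frame_time_s * power_w


# Placeholder inputs, NOT measured values
print(efficiency_fps_per_watt(0.5, 5.0))  # 0.4 (fps/W)
print(energy_per_frame_j(0.5, 5.0))       # 2.5 (J per frame)
```

Seen this way, a platform that is twice as efficient needs half the energy per processed frame.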

Results

All benchmarked platforms meet the requirement of processing a single image in at most three seconds. Additionally, the power consumption of the Jetson Nano and the Raspberry Pi 3 is low enough to consider them for use in the drone.

Performance-wise, the Jetson Nano with multithreading is on par with the Core i5-3320M in single-threaded mode. Taking the power consumption of the T430 into account, however, the Jetson Nano clearly wins. With its CUDA cores it also offers performance reserves for future deep-learning-based perception algorithms.

The Raspberry Pi 3 Model B also delivers reasonable performance for its low power consumption and less powerful CPU.

Comparing efficiency, the Jetson Nano is about twice as efficient as the Raspberry Pi 3. This might be due to the Jetson Nano's more powerful CPU cores (Cortex-A57 versus the Cortex-A53 cores of the Raspberry Pi 3).

Regarding the performance of the CUDA implementation, I received an email from NVIDIA support:

> Unfortunately the CUDA code in OpenCV is unoptimized and wasn’t authored by NVIDIA. It also contains some bugs which is why we ship the OpenCV build included with JetPack without CUDA enabled. Since Itseez was acquired there are concerns about our patches being accepted upstream.
>
> If you have time I recommend that you try using the filtering functions from NPP (NVIDIA Performance Primitives) or VisionWorks, they will be much faster.
>
> – NVIDIA support

I will try the latter and update the article later.

When deciding which board we will finally use to build multiple drones, other factors besides performance, such as availability, driver support, price and the future direction of the project, will play a major role.

Contribute to the development

Hackathon

We are holding a hackathon on image recognition from 26 to 28 July 2019 in Berlin. If you are interested, check the link for more information: https://www.hs-augsburg.de/searchwing/?p=198

Longterm help

If you want to help on a longer-term basis, we are happy to receive any help. We are actively looking for people who want to help us out in computer vision (deep learning, classical approaches), tracking, ROS / MAVLink or ArduPilot / PX4.

The code can be found here: https://gitlab.com/searchwing/development/boatdetectorcpp

If you are interested in helping out with the image processing, or even want to write a thesis (bachelor's / master's), please contact me via

If you are interested in contributing to other parts of the project, please write an email to one of the other team members listed in the team overview.